With Windows Server 2016 we got SDNv2, the second generation of Microsoft Software Defined Networking for Hyper-V. If you want to know more about SDNv2, check the Microsoft Docs. To make it easier to validate and test SDNv2, Microsoft has created a scripts repo to get you started. The SDNExpress scripts can be used to deploy SDNv2, with or without VMM, on four or more Hyper-V hosts in a single rack/cluster/scale unit.

But what if you don’t have four spare servers to test this out? Don’t fear, nested Hyper-V is here! With Windows Server 2016 we also got the ability to run multiple Hyper-V hosts nested on a single physical host, which makes it the perfect tool for labs and testing.

Jaromir Kaspar from Microsoft has created an awesome toolkit to quickly spin up a nested lab with a domain controller, optionally VMM, and a bunch of hosts. It also contains a great variety of scenarios for testing different configurations of the Windows Software-Defined Datacenter and much more.

A few months ago I added my first contribution to this project: a scenario that deploys a full VMM-managed SDN fabric using nested Hyper-V on a single server with less than 10 minutes of hands-on effort.

With the latest updates to the SDNExpress scripts from Greg Cusanza, I decided to also create a scenario for deploying SDNv2 without VMM.

To get started, you need to prepare a few things:

- A Hyper-V host with 100 GB of free memory and 300 GB of disk space
- A Windows Server 2016 or 2019 ISO
- Windows Admin Center 1806+
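As a rough pre-flight check for the memory and disk figures in the list above, here is a minimal Python sketch. It is not part of the toolkit: the function names are hypothetical, the free memory value has to be supplied by you (for example, read from Task Manager), and treating GB as GiB is an assumption.

```python
import shutil
from typing import List

GIB = 1024 ** 3  # bytes per gibibyte


def free_disk_gib(path: str) -> float:
    """Return the free space, in GiB, on the volume that holds `path`."""
    return shutil.disk_usage(path).free / GIB


def check_prerequisites(
    free_memory_gib: float,
    free_disk_space_gib: float,
    min_memory_gib: float = 100,  # memory figure from the prerequisites list
    min_disk_gib: float = 300,    # disk figure from the prerequisites list
) -> List[str]:
    """Return a list of problems; an empty list means the host looks fine."""
    problems = []
    if free_memory_gib < min_memory_gib:
        problems.append(
            f"memory: {free_memory_gib:.0f} GiB free, {min_memory_gib:.0f} GiB needed"
        )
    if free_disk_space_gib < min_disk_gib:
        problems.append(
            f"disk: {free_disk_space_gib:.0f} GiB free, {min_disk_gib:.0f} GiB needed"
        )
    return problems


# Example (the lab folder path is hypothetical):
# print(check_prerequisites(free_memory_gib=128,
#                           free_disk_space_gib=free_disk_gib("D:\\Lab")))
```

An empty list means both thresholds are met; anything else tells you what to free up before starting.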

Jaromir has already created some good guidance on how to use his toolkit to spin up the initial VMs. Just follow steps 1 to 7 to spin up the Hyper-V hosts, DC and a management server, using the labconfig file content from the SDNScenario.

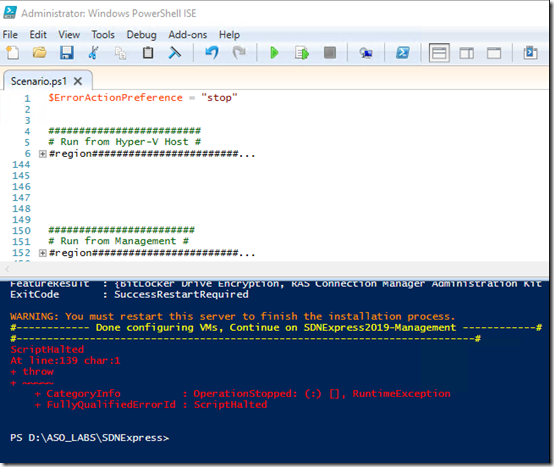

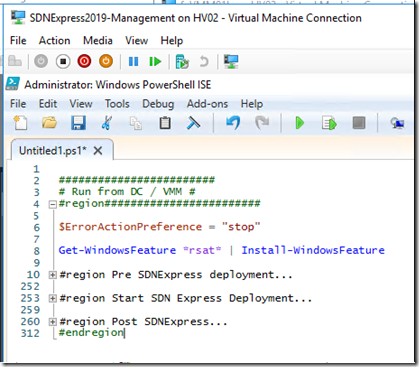

When you have created the DC, the Management VM and the four Hyper-V hosts, it is time for my script to do its magic! Copy the scenario script into the same folder as the lab scripts and the labconfig.ps1 file, then right-click it and select Edit to open it in PowerShell ISE. The script is divided into two regions: the first you run on the Hyper-V host that the lab is deployed on, and the second you copy into the Management VM.

The first part of the script, which you execute on the Hyper-V host, starts by looking for the VHDX in the ParentDisks folder. If it cannot find it, you will be prompted to select a VHDX to use for deploying the SDN components; simply use the same Core VHDX file in the ParentDisks folder that the lab already used to deploy the nested hosts. Second, you will be prompted to select the MultiNodeConfig.psd1 file that is part of the scenario repository; this file contains all the information needed for the SDNExpress deployment. Finally, you are prompted for the Windows Admin Center MSI installer. The script will then start all the VMs for the lab, copy all the files needed into the Management VM and install the RSAT tools.

It is then time to log in to the Management VM and use the second part of the scenario script.

The second part of the scenario is divided into three regions. The first region prepares a few accounts and groups for SDN, configures the hosts for Hyper-V and creates a Storage Spaces Direct cluster.

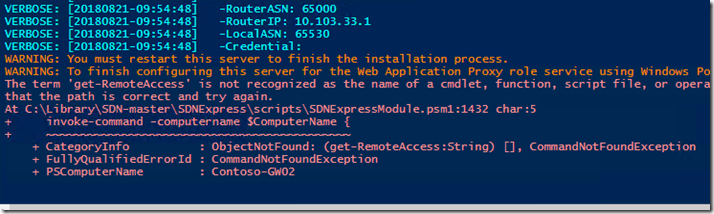

The second region runs the SDNExpress deployment script. This part can be a bit tricky in nested environments, as there are sometimes a few timing issues. The first known issue is that the SDN VMs are sometimes not joined to the domain; the gateway VMs in particular seem to have problems. If this happens, use the Hyper-V Manager console on the Management VM to connect to HV1, HV2 or HV3 and domain join the VMs manually to corp.contoso.com with SCONFIG.

The second known issue is that the SLB MUXes sometimes time out on WinRM during deployment. If this happens, just rerun the SDNExpress deployment script and it should continue; the SDNExpress script is made to be rerun if any errors occur.

![clip_image001[6] clip_image001[6]](https://cloudmechanic.net/wp-content/uploads/2018/08/clip_image0016_thumb.png?w=675&h=250)

The third known issue is that the gateways need to be rebooted after RemoteAccess is installed. If this happens, use the Hyper-V Manager console on the Management VM to connect to HV1 or HV2 and restart the related Contoso-GW VM, then rerun the SDNExpress deployment script.
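Since the usual fix for these timing issues is to rerun the deployment, the rerun can be wrapped in a small retry loop. The Python sketch below is only an illustration and not part of the scenario: `run_until_success` is a hypothetical helper, and it assumes the command signals failure with a non-zero exit code, which you should verify for your own invocation of the script.

```python
import subprocess
import time
from typing import Callable, Optional, Sequence


def run_until_success(
    command: Sequence[str],
    max_attempts: int = 3,
    delay_seconds: float = 60.0,
    runner: Optional[Callable[[Sequence[str]], int]] = None,
) -> int:
    """Run `command` until it exits with code 0 or the attempts run out.

    Returns the number of attempts used. Raises RuntimeError if every
    attempt fails. `runner` can be replaced for testing; by default the
    command is executed with subprocess.
    """
    if runner is None:
        runner = lambda cmd: subprocess.run(cmd).returncode
    for attempt in range(1, max_attempts + 1):
        if runner(command) == 0:
            return attempt
        if attempt < max_attempts:
            # Give the nested VMs time to settle before the next attempt.
            time.sleep(delay_seconds)
    raise RuntimeError(f"command failed after {max_attempts} attempts")


# Example (the script path and arguments are assumptions; adjust them
# to match how you start the deployment on the Management VM):
# run_until_success(["powershell.exe", "-File", "SDNExpress.ps1"])
```

A retry loop will not fix the domain join or gateway reboot issues by itself; those still need the manual step first, after which the rerun should continue.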

The last region configures the BGP peering on the router (DC) and installs Windows Admin Center and Google Chrome on the Management VM.

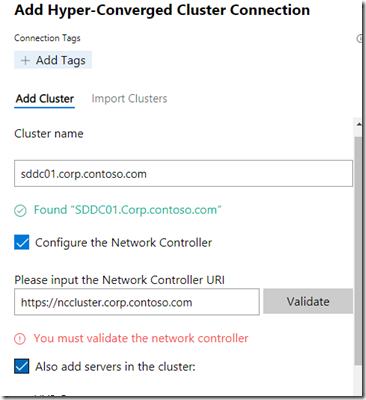
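Before opening the browser, you can confirm that Windows Admin Center is actually listening on port 9999. The sketch below is a hedged Python example, not part of the scenario script: `wac_is_reachable` is a hypothetical name, and it assumes the gateway presents a self-signed certificate, so certificate verification is switched off for this local check only.

```python
import ssl
import urllib.error
import urllib.request


def wac_is_reachable(url: str = "https://localhost:9999/", timeout: float = 5.0) -> bool:
    """Return True if something answers HTTPS requests at `url`."""
    # Assumption: the lab uses a self-signed certificate, so skip
    # verification. Do not do this against anything but a local lab.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=context):
            return True
    except urllib.error.HTTPError:
        # The server answered with an HTTP error status (for example an
        # authentication challenge), so it is up.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, TLS failure and similar.
        return False


# Example, run on the Management VM:
# print(wac_is_reachable())
```

If this returns False, check that the Windows Admin Center installation finished before troubleshooting anything in the browser.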

When the script has completed successfully, open Google Chrome on the Management VM and go to https://localhost:9999/ to open Windows Admin Center. Click on Add, select Hyper-Converged Cluster, and enter the cluster name and network controller URL as shown below.

Click on the Validate button to validate the network controller connection. If prompted, click on Install RSAT-NetworkController and validate again, then add the cluster.

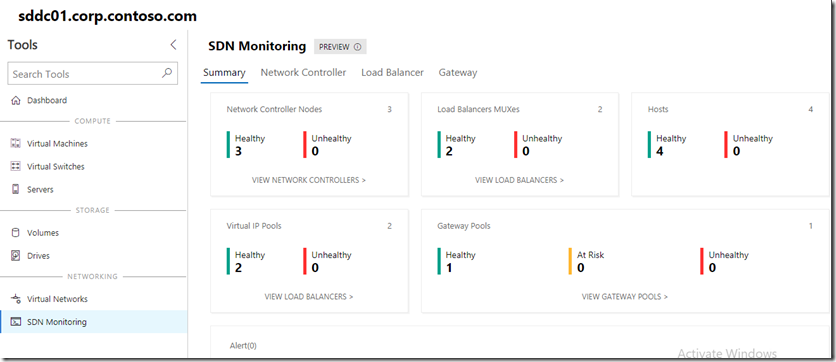

Click on the hyper-converged cluster sddc01.corp.contoso.com and you should now see the cluster dashboard. Go to SDN Monitoring to check the health of your SDN environment; if you are not already connected to the network controller, enter the name of one of the controllers to continue. You should now see a healthy and happy SDN environment ready to play with.

If everything is green and healthy, you can now start building virtual networks and VMs, provision a gateway for the virtual network and many other things. More on this later…

![clip_image001[3] clip_image001[3]](https://cloudmechanic.net/wp-content/uploads/2017/12/clip_image0013_thumb.png?w=356&h=249)